New Stable Diffusion 3 release excels at AI-generated body horror

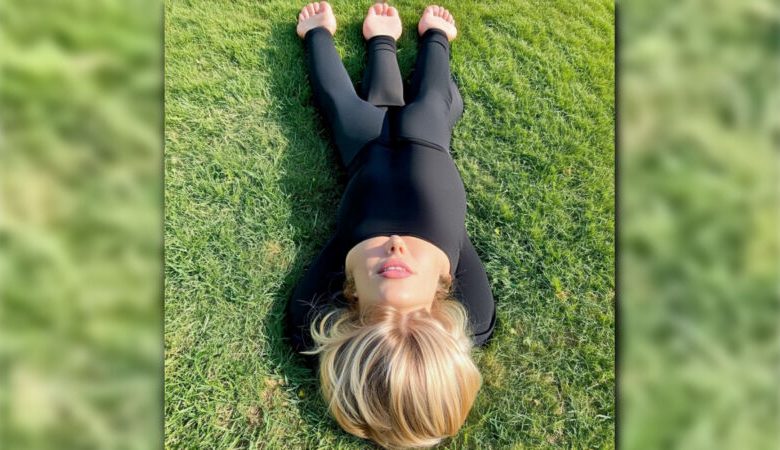

An AI-generated image created using Stable Diffusion 3 of a girl lying in the grass. (credit: HorneyMetalBeing)

On Wednesday, Stability AI released weights for Stable Diffusion 3 Medium, an image-synthesis model that turns text prompts into AI-generated images. Its arrival has been ridiculed online, however, because it renders humans in a way that seems like a step backward from other state-of-the-art image-synthesis models like Midjourney or DALL-E 3. As a result, it can churn out wild, anatomically incorrect visual abominations with ease.

A thread on Reddit, titled, “Is this release supposed to be a joke? [SD3-2B],” details the spectacular failures of SD3 Medium at rendering humans, especially human limbs like hands and feet. Another thread, titled, “Why is SD3 so bad at generating girls lying on the grass?” shows similar issues, but for entire human bodies.

Hands have traditionally been a challenge for AI image generators due to a lack of good examples in early training data sets, but more recently, several image-synthesis models seem to have overcome the issue. In that sense, SD3 appears to be a huge step backward for the image-synthesis enthusiasts who gather on Reddit, especially compared to recent Stability releases like SDXL Turbo in November.